Pedro Cruz

PCIe hot-plugging – A total pain. Or is it?

Hot-swapping PCIe Gen 4 Devices on a consumer-grade setup

At Quarch, we hot-swap drives hundreds (sometimes thousands) of times during the development of a new product. This ensures that our application notes are properly tested and that the host and device remain unaffected by our interposer, which is meant to be an entirely transparent layer. To do this, we would usually rely on enterprise-grade hosts and systems that natively support hot-plugging of drives. (The Dell PowerEdge R720 server is used by UNH-IOL for our hot-swap testing at the NVMe plugfest events.)

Since the introduction of social distancing measures, our engineers have been working remotely, with very occasional and controlled visits to the lab. This introduced a challenge: how could we give them access to hot-plug-capable workstations at home?

The YouTube channel Linus Tech Tips showed how frustrating PCIe hot-plugging can be. They used an ASUS WS C621E SAGE motherboard and, after some BIOS tweaking, got it working, but it was a struggle.

So how do we solve this? Well, automation is at the core of what we do, and the first measure was to set up remote access to the test station in the lab. This worked well for most applications, but some R&D projects still required a lower level of interaction with the hardware—swapping DUTs and probing lanes, for instance.

From here, we had two options: give each engineer a rack-mounted server and hardware worth several thousand and pray their cats wouldn’t end up sleeping on it, or… find a hot-swap-capable system based on consumer-grade equipment that could fit in a standard PC case.

We went for the latter. Sorry, cats.

BIOS Memory Allocation

To understand why it is so hard to support PCIe hot-plugging, first we need to understand what happens from the moment you press the power button on your PC until you can log into your OS.

One of the most important events is the creation of a pool of resources: a list of all the hardware you have connected to your motherboard. This is done by your BIOS before the OS is loaded. Memory is allocated for each device, and this allocation remains static until the next power cycle. If you try to connect a new video card while the host is on, the chipset will not allocate memory for it and your system might become unstable, with symptoms ranging from a frozen GUI to a BSoD. On a side note, several of our customers, especially the hardware integrators, use our products to automate this kind of test and create systems that handle this kind of event better.
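To make the idea of a static per-device memory allocation more concrete, here is a minimal sketch that lists the memory regions assigned to each PCI device. It assumes a Linux host, where the kernel exposes each device's assigned regions in a sysfs file called `resource` (one line per region, with start address, end address, and flags in hexadecimal). The function names are our own, chosen for illustration only, and the article's test system may well run a different OS.

```python
from pathlib import Path


def parse_resource_table(text):
    """Parse the contents of a Linux sysfs PCI 'resource' file.

    Each line holds three hex fields: start, end, flags.
    Entries that are all zeros are unassigned and skipped.
    """
    regions = []
    for index, line in enumerate(text.splitlines()):
        fields = line.split()
        if len(fields) != 3:
            continue  # ignore anything that is not a start/end/flags triple
        start, end, flags = (int(field, 16) for field in fields)
        if start == 0 and end == 0:
            continue  # this region was never assigned
        regions.append(
            {"index": index, "start": start, "size": end - start + 1, "flags": flags}
        )
    return regions


def list_pci_regions(root="/sys/bus/pci/devices"):
    """Map each PCI address to its assigned regions (empty dict if sysfs is absent)."""
    base = Path(root)
    if not base.is_dir():
        return {}
    result = {}
    for device in sorted(base.iterdir()):
        resource_file = device / "resource"
        if resource_file.is_file():
            result[device.name] = parse_resource_table(resource_file.read_text())
    return result
```

Calling `list_pci_regions()` before and after plugging in a device gives a rough view of which windows were already reserved at boot and which, if any, changed afterwards.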

Synthetic Devices

Our goal here is to come up with a system that is hot-plug capable at the application level, not necessarily at the BIOS level. We could, for instance, reserve the memory at boot time but only expose the resource to the OS once the device is actually connected to the slot. This is called a synthetic device. For all intents and purposes, the BIOS sees a device (or several) connected to the system and reserves the memory, but the OS lists it as a synthetic generic device while the hardware is unplugged.

Once you plug in the drive (or video card, or any PCIe device for that matter), the synthetic device exposes all the functionality of the real hardware to the OS. Once you unplug it, it goes back to being a synthetic generic device, and so on.
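One simple way to observe this behaviour from software is to compare the list of PCI devices the OS reports before and after a plug or unplug event. The sketch below assumes a Linux host that lists devices under `/sys/bus/pci/devices`; how the synthetic placeholder itself shows up depends on the OS and the switch, so treat the path and the helper names as illustrative assumptions rather than a description of our setup.

```python
from pathlib import Path


def snapshot(root="/sys/bus/pci/devices"):
    """Return the set of PCI addresses the OS currently lists (empty if the path is missing)."""
    base = Path(root)
    if not base.is_dir():
        return set()
    return {entry.name for entry in base.iterdir()}


def diff_snapshots(before, after):
    """Report which addresses appeared and which vanished between two snapshots."""
    return {"added": sorted(after - before), "removed": sorted(before - after)}
```

Take one snapshot with the drive unplugged and another after plugging it in, then pass both to `diff_snapshots` to see which addresses changed.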

We can create these synthetic devices using a PCIe switch. Our US partners at Serial Cables make an AIC host card with a switch that is just perfect for our application. This particular card uses a Broadcom part, but a Microsemi option is also available.

Setting Up The System

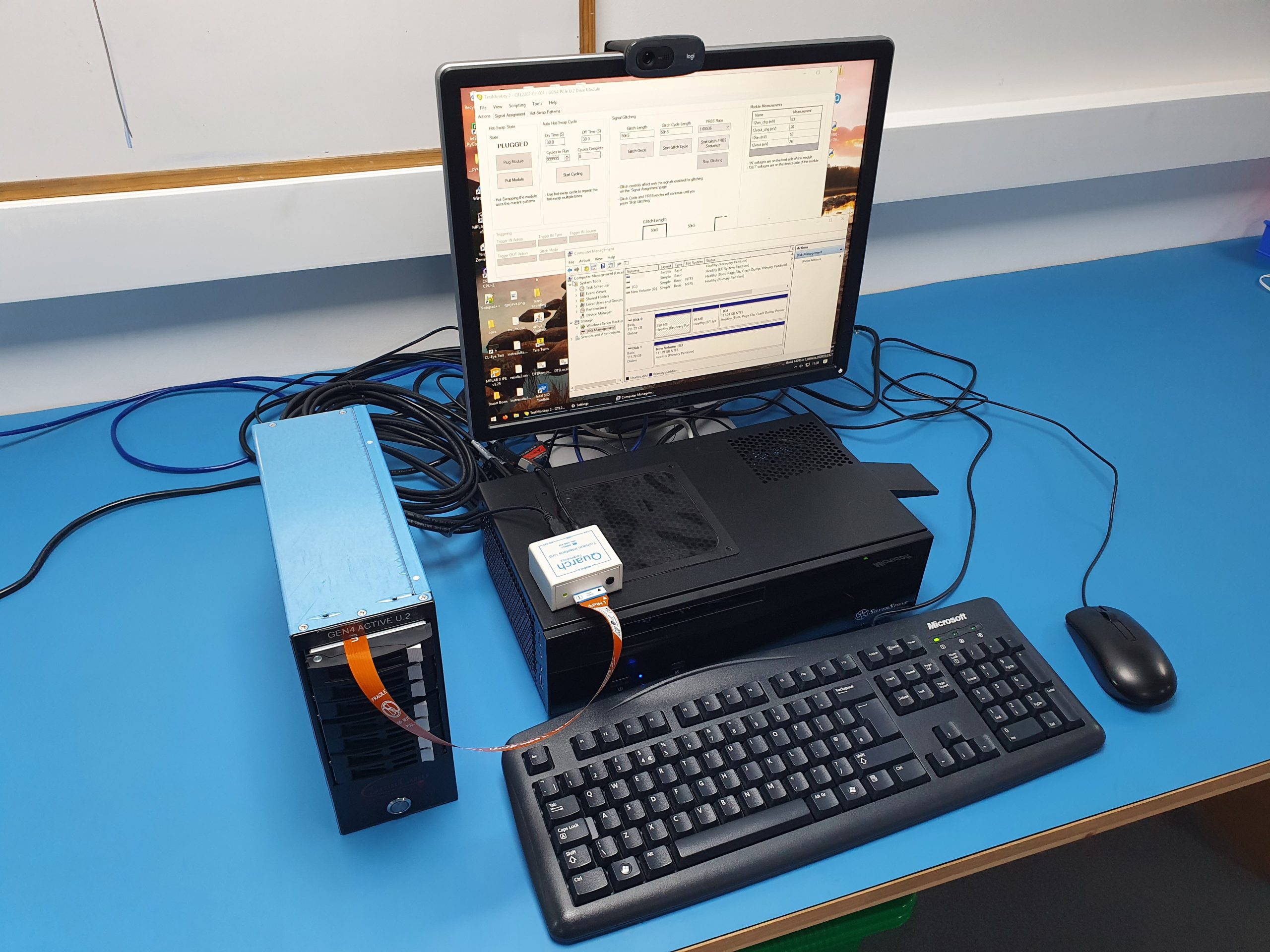

For this build, we are going to use a standard ASUS ROG mini-motherboard and an Intel i7 processor. There’s nothing special about this setup: it was intended to offer good performance in a small form factor without spending too much.

The magic happens at the PCIe Gen4 x16 SFF-8644 Host Card by Serial Cables.

This AIC card supports Gen4 speeds (if you have a compatible motherboard) and can connect to an enclosure or a simple cable. Using an enclosure like Serial Cables’ active U.2 JBOF allows multiple drives to be connected with one host card. An SFF-8644 to SFF-8639 cable is a simple, quiet, and cheap way to plug in your NVMe drives.

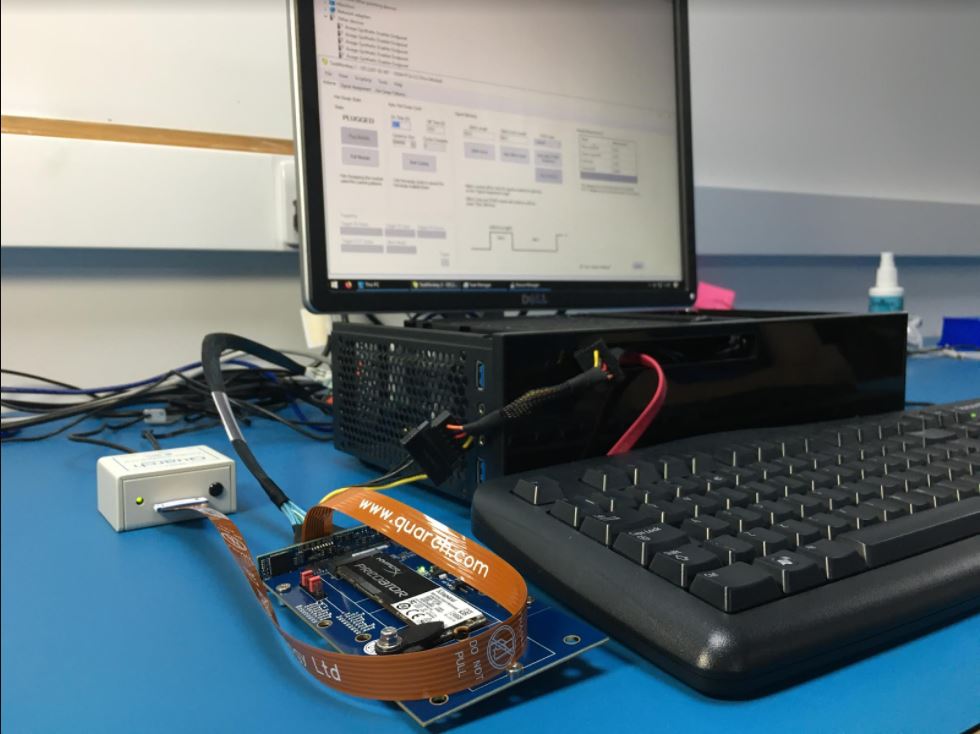

Quarch Breaker

This setup allows you to use one of our U.2 Breakers to automate hot-plugging events while preventing the system from hanging or crashing, something that is common when hot-plugging PCIe devices on consumer-grade setups. We ran our tests on an M.2 drive with an adapter by Serial Cables.
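An automated hot-plug test of this kind boils down to a loop: unplug, wait for the drive to disappear, plug, wait for it to reappear. The following is a minimal sketch of that loop, not our actual test code. The `unplug`, `plug`, and `is_present` callables are hypothetical placeholders that you would wire up to whatever controls your breaker and however you detect the drive on your OS.

```python
import time


def wait_until(probe, expected, timeout_s=10.0, poll_s=0.2):
    """Poll probe() until it returns `expected` or the timeout expires."""
    deadline = time.monotonic() + timeout_s
    while True:
        if probe() == expected:
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_s)


def run_hotplug_cycles(unplug, plug, is_present, cycles=100, timeout_s=10.0, poll_s=0.2):
    """Run unplug/plug cycles and return a list of (cycle, problem) tuples.

    unplug, plug and is_present are caller-supplied (hypothetical) callables.
    An empty list means every cycle behaved as expected.
    """
    failures = []
    for cycle in range(1, cycles + 1):
        unplug()
        if not wait_until(is_present, False, timeout_s, poll_s):
            failures.append((cycle, "still present after unplug"))
        plug()
        if not wait_until(is_present, True, timeout_s, poll_s):
            failures.append((cycle, "did not reappear after plug"))
    return failures
```

Keeping the hardware control and the presence check as injected callables means the same loop can be dry-run against a fake device before pointing it at real hardware.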

Video: Watch as the M.2 drive disappears from the system when unplugged and then reappears when reconnected